Inside ChatGPT: How Large Language Models Predict the Future of Text

An Exploration of The Architecture, Learning Process, and Mysteries Behind AI-Driven Text Generation

This is The Curious Mind, by Álvaro Muñiz: a newsletter where you will learn about technical topics in an easy way, from decision-making to personal finance.

Large Language Models (LLMs) like ChatGPT are remarkably good at producing human-like text. But how exactly do they do it?

The process behind LLMs is built on three key stages: embedding, attention, and unembedding. These steps allow the model to go from some input text to meaningful new text. Yet the inner workings of an LLM remain a mystery in many ways: it learns to make accurate predictions through a training process that adjusts its billions of parameters with no human-readable logic, guided only by the goal of minimising errors.

In this article, we’ll take a closer look at what happens behind the scenes in an LLM. From turning words into numbers to generating context-aware predictions, we’ll explore how the architecture of these models has revolutionized the way we interact with artificial intelligence.

Today in a Nutshell

LLMs have broadly three steps: embedding, attention and unembedding.

The embedding phase turns words into numbers.

The attention phase modifies these numbers to take into account the context in which the word appears.

The unembedding phase takes the final numbers and outputs how likely each word in the dictionary is to be the next word.

These phases are learned in the training process, and we do not understand what the machine has learnt.

What LLMs do

The goal of an LLM is simple:

Given a sequence of words, predict what word should come next.

For example, consider the following sentence:

I live with my mom and my …

Given the above sentence, an LLM should "know" that dad, sister or dog are good next words, but that house, hot or Spain are not.

LLMs like ChatGPT produce their output word by word. That is, when you ask ChatGPT the question "What is The Curious Mind?", this is what it does:

Predict what word should come after "What is The Curious Mind?".

Prediction: The

Predict what word should come after "What is The Curious Mind? The".

Prediction: Curious

Predict what word should come after "What is The Curious Mind? The Curious".

Prediction: Mind

Predict what word should come after "What is The Curious Mind? The Curious Mind".

Prediction: is

Predict what word should come after "What is The Curious Mind? The Curious Mind is".

Prediction: a

…

That is, the model feeds the word it predicted back into the original sentence, then predicts again with this new input, and repeats the process over and over.
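The feedback loop described above can be sketched in a few lines of Python. This is only an illustration: `TOY_NEXT_WORD`, `predict_next_word` and `generate` are hypothetical names, and the hand-written lookup table stands in for a real model, which would compute its prediction from the whole input rather than just the last word.

```python
# Toy stand-in for a trained model: a hand-written lookup table (hypothetical).
# A real LLM computes its prediction from the whole input text.
TOY_NEXT_WORD = {
    "Mind?": "The",
    "The": "Curious",
    "Curious": "Mind",
    "Mind": "is",
    "is": "a",
}


def predict_next_word(words):
    """Return the toy prediction for the word that follows `words`."""
    return TOY_NEXT_WORD.get(words[-1], "<end>")


def generate(prompt, max_new_words=5):
    """Repeatedly predict a word and feed it back into the input."""
    words = prompt.split()
    for _ in range(max_new_words):
        next_word = predict_next_word(words)
        if next_word == "<end>":
            break
        words.append(next_word)  # the prediction becomes part of the input
    return " ".join(words)


print(generate("What is The Curious Mind?"))
# prints: What is The Curious Mind? The Curious Mind is a
```

The important part is the loop: each predicted word is appended to the input before the next prediction is made.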

Architecture

What an LLM does is, as we saw, very simple: given a sequence of words, it predicts the next one. It then feeds that word back into its input and predicts the next one, repeating this over and over again.

The key question is: how does it make these predictions?

An LLM produces text in three steps:

Embedding

Attention

Unembedding

What follows is an (over)simplification of what exactly each of these steps is. At the end, we will talk about how ChatGPT learns a good way to do each step.

Step 1: Embedding

Computers work with numbers, not words. The first step is to turn words into numbers.

This is called the embedding phase. In this step, ChatGPT converts each word in its dictionary to a sequence of numbers (what in mathematics is called a vector). For example, it could look something like Dog = (1, -2.3, 0, 10,999, 2).

A model like ChatGPT does not turn a word into a sequence of 5 numbers but rather 12,288 numbers. This number doesn’t mean anything in particular: it is just a large enough number to 'leave enough room' for very different words to be far apart, and small enough to be computationally efficient.
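To make the idea of an embedding concrete, here is a minimal sketch of a lookup table that maps words to vectors. The three words and all the numbers are made up for illustration, and `EMBEDDINGS`, `embed` and `distance` are hypothetical names; a real model learns its own values and uses far more numbers per word.

```python
import math

# Made-up 5-number embeddings (hypothetical values); a real model learns
# its embeddings during training and uses far more numbers per word.
EMBEDDINGS = {
    "dog": [1.0, -2.3, 0.0, 4.0, 2.0],
    "cat": [0.9, -2.0, 0.1, 3.5, 2.2],
    "bank": [-3.0, 1.5, 7.0, 0.0, -1.0],
}


def embed(text):
    """Look up the vector of every word in `text`."""
    return [EMBEDDINGS[word] for word in text.lower().split()]


def distance(u, v):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


dog, cat, bank = embed("dog cat bank")
print(distance(dog, cat))   # small: similar words sit close together
print(distance(dog, bank))  # large: unrelated words sit far apart
```

With more numbers per word there is more room to place very different words far apart, which is the reason given above for using thousands of numbers rather than five.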

The next step is the architecture that has revolutionised artificial intelligence in recent years.

Step 2: Attention Layer

Now every word has a vector (a sequence of numbers) associated with it. The next thing ChatGPT does is to build some relations between words.

For instance, imagine we ask ChatGPT the following two questions:

How much cash can I store in a bank?

What kind of animals live in the bank of a river?

(Spanish readers: the two sentences should read “Cuánto dinero puedo guardar en un banco?” and “Qué tipo de animales viven en el banco de un río?”)

After the embedding phase, ChatGPT has assigned some vector to the word "bank". However, if we want ChatGPT to make any sense when answering the above two questions, it needs some way to figure out from the context that these two instances of bank mean very different things.

The solution to this problem was a landmark in machine learning and artificial intelligence. In 2017, researchers from Google published a paper titled Attention Is All You Need where they introduced a machine learning architecture that they called a transformer. [1]

Consider the word "it" in the image above. It refers to "The animal" at the beginning of the sentence, but not to "the street" later on. The goal of the transformer is to scan the words around "it" and learn how much each of them relates to "it".

Before the attention layer, the word embedding of "it" carried no specific meaning, just like the entry for "it" in a dictionary.

After the attention layer, the embedding of the word "it" in this sentence has been modified to capture that it refers to an animal, that that animal didn’t do something, and so on.

This attention process is performed several times in parallel. The idea is that, by performing this attention pattern multiple times, each time we pick up a different type of relationship between words (e.g. adjectives relating to nouns, adverbs relating to verbs, etc.).

Lastly, every modified word (the original embedding plus its attention to other words) gets passed through a neural network. As is usual in machine learning, it is not entirely clear what this neural network is learning, but it seems to be storing facts and information it learns about the words.

A transformer is a machine learning architecture composed of an attention layer (or several parallel ones) and a neural network. This combination can be repeated several times.
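Here is a heavily simplified sketch of a single attention step, using the "bank" example from earlier. It is not how ChatGPT is actually implemented: a real transformer first passes each vector through learned matrices (usually called queries, keys and values), restricts which words can look at which, and runs many attention steps in parallel. All names and the 2-number vectors below are hypothetical.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that add up to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product: a simple measure of how much two vectors align."""
    return sum(a * b for a, b in zip(u, v))


def attention(vectors):
    """Simplified self-attention: each word absorbs a weighted mix of all words."""
    dim = len(vectors[0])
    updated = []
    for query in vectors:
        # How strongly does this word relate to every word in the text?
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # Weighted average of all word vectors, using those weights.
        context = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        # Original embedding plus its attention to the other words.
        updated.append([q + c for q, c in zip(query, context)])
    return updated


# Made-up 2-number embeddings for illustration only.
cash, river, bank = [1.0, 0.0], [0.0, 1.0], [0.5, 0.5]

print(attention([cash, bank])[1])   # "bank" after seeing "cash"
print(attention([river, bank])[1])  # "bank" after seeing "river"
```

The word "bank" starts from the same vector in both cases, but ends up with a different one depending on its neighbours, which is the effect the attention layer is meant to achieve.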

Step 3: Unembedding

Here is what has happened up to now:

Embedding: ChatGPT transformed each word into a vector.

Attention: ChatGPT modified the vectors to capture connections with other words in the text.

That is, now we have a vector for each word, which "knows" about the other words in the text and how they are related to it.

In particular, the last word in the text has picked up information about everything that comes before it. ChatGPT uses this context-rich word to make our new prediction.

When ChatGPT makes a prediction, it uses only the context-rich vector of the last word of the text!

This process is called the unembedding phase. We have a word full of context-rich meaning, and we want to turn it into a new word. This will be the next word ChatGPT spits out.

At this step, ChatGPT produces a probability for each word in its dictionary (a total of 50,257 tokens, where a token is something similar to a word). For example, in our original sentence "I live with my mom and my …", after completing every step above the model will have a list covering every word in its dictionary, looking something like this (I made up these values):

dad: 90% likely

dog: 2% likely

friend: 2% likely

…

Spain: 0% likely

house: 0% likely

It will then pick the most likely word (or choose a word according to some other rule, e.g. choose randomly according to each word’s probability) and print this on the screen.
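The unembedding step can be sketched as follows, assuming the common approach of scoring every dictionary word against the final vector and turning the scores into probabilities with a softmax. The three-word dictionary, its vectors and the function names are all hypothetical; the sketch shows both rules mentioned above, picking the most likely word and choosing randomly according to the probabilities.

```python
import math
import random


def softmax(scores):
    """Turn arbitrary scores into probabilities that add up to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_probabilities(last_vector, unembedding):
    """Score every dictionary word against the last vector, then normalise."""
    words = list(unembedding)
    scores = [
        sum(a * b for a, b in zip(last_vector, unembedding[word]))
        for word in words
    ]
    return dict(zip(words, softmax(scores)))


def pick_most_likely(probabilities):
    """Rule 1: always choose the word with the highest probability."""
    return max(probabilities, key=probabilities.get)


def sample(probabilities, rng):
    """Rule 2: choose randomly, in proportion to each word's probability."""
    words = list(probabilities)
    weights = [probabilities[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Made-up vectors for a tiny dictionary (illustration only).
UNEMBEDDING = {"dad": [2.0, 1.0], "dog": [0.5, 0.2], "Spain": [-3.0, -1.0]}
last_vector = [2.0, 1.0]  # stands in for the context-rich last word

probabilities = next_word_probabilities(last_vector, UNEMBEDDING)
print(probabilities)
print(pick_most_likely(probabilities))
print(sample(probabilities, random.Random(0)))
```

Always picking the most likely word gives the same answer every time, while sampling lets less likely words appear occasionally.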

Why It’s Called Machine Learning

We saw how an LLM like ChatGPT works. Each time you input some text:

It turns each word into a sequence of numbers

It modifies every such sequence to capture its relationship with the rest of the words in the text

Using the modified sequence for the last word (which now contains information about the whole text), it produces probabilities for which words can come next.

According to this probability distribution, it chooses what word to print.

Now here are some obvious questions. How does ChatGPT choose which numbers to assign to each word, i.e. how to embed it? How does it learn which words relate to which in a sentence, and how much? How does it learn what word it should predict after all this?

This is the magic of machine learning (and why it’s called machine learning):

We do not tell ChatGPT how to do any of these steps—it learns by itself by a process called training.

When ChatGPT was trained, it was given access to a vast amount of text (think of it as ChatGPT digesting the whole Internet). It used each piece of text on the Internet to learn.

For example, in its training process ChatGPT read the following paragraph from Wikipedia:

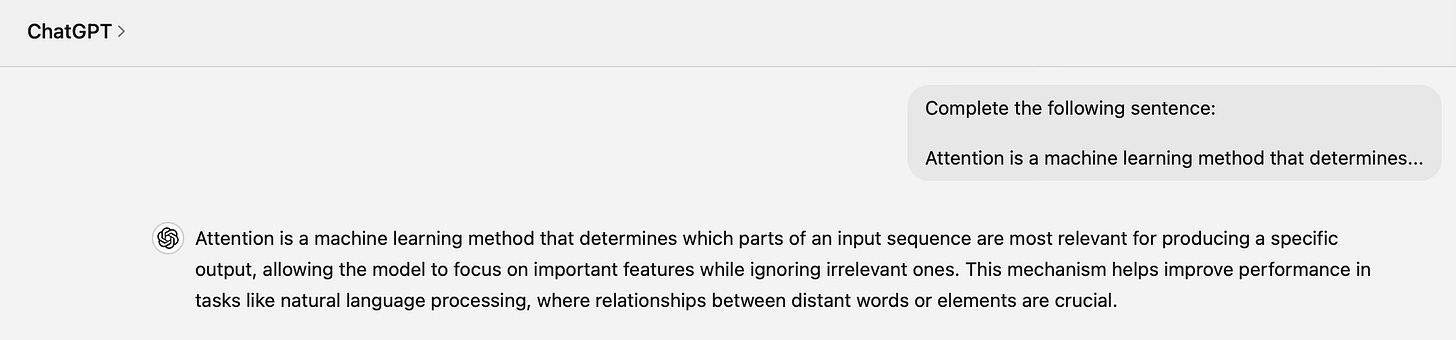

Attention is a machine learning method that determines the relative importance of each component in a sequence relative to the other components in that sequence. In natural language processing, importance is represented by "soft" weights assigned to each word in a sentence.

The way ChatGPT learnt to produce text was as follows:

It hid most of the paragraph, having only access to something like "Attention is a machine learning method that determines …".

It ran all the processes above to predict a new word.

At the beginning, ChatGPT knew nothing: its ways to embed, produce attention patterns and unembed were just random. So it produced something random like "river".

It then revealed to itself the true word in the paragraph, "the", and compared it to its prediction, "river". It saw that it had done very poorly.

It then asked itself "how can I make a small tweak to my procedure to embed, produce attention and unembed so that my output is slightly closer to "the"?".

It tweaked its procedures accordingly and tried again.

With a new output, say "some" (slightly better than before), it repeated the whole process again.

This was done with billions of sentences it found on the Internet, over and over again.
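The "small tweak" in the steps above is usually done with a method called gradient descent. Below is a minimal sketch under strong simplifying assumptions: the whole model is reduced to three adjustable scores, one per word in a made-up dictionary, and the error is measured with the standard cross-entropy loss. The names `VOCAB` and `training_step` are hypothetical, and a real model tweaks billions of parameters instead of three.

```python
import math

VOCAB = ["river", "some", "the"]  # tiny made-up dictionary


def softmax(scores):
    """Turn arbitrary scores into probabilities that add up to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def training_step(scores, target_index, learning_rate=0.5):
    """Make one small tweak that raises the probability of the true word."""
    probs = softmax(scores)
    loss = -math.log(probs[target_index])  # large when the true word is unlikely
    new_scores = []
    for i, (score, prob) in enumerate(zip(scores, probs)):
        # Gradient of the loss: (probability - 1) for the true word,
        # and just the probability for every other word.
        gradient = prob - (1.0 if i == target_index else 0.0)
        new_scores.append(score - learning_rate * gradient)
    return new_scores, loss


scores = [2.0, 0.5, 0.0]  # starting point that favours "river"
target = VOCAB.index("the")
losses = []
for _ in range(50):
    scores, loss = training_step(scores, target)
    losses.append(loss)

best = max(range(len(scores)), key=scores.__getitem__)
print(VOCAB[best])            # the word the toy model now prefers
print(losses[0], losses[-1])  # the error before and after training
```

Each step only nudges the scores a little, but repeating it many times moves the prediction from "river" towards "the", mirroring the try, compare, tweak cycle described above.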

At the end, ChatGPT can produce the impressive text we see nowadays. The interesting thing is that it is not just memorising the Internet (this would be impossible). Instead, it is condensing the information of the Internet in its own way, so that it can produce an output that makes sense.

The Big Mystery

One of the big questions in deep learning is what exactly the model has learned.

As we said above, we do not tell the model how to embed words, how to produce attention or how to unembed words. It learns by itself during the training phase, simply by trying to minimise the errors it makes.

This has advantages and disadvantages.

On the good side, this means that we can have systems like ChatGPT—true technological revolutions that have changed the way we access and deal with information. If a human had to figure out what is a good way—in a precise mathematical sense—to embed, pay attention and unembed words, we would never see anything remotely close to ChatGPT.

To give you an idea, ChatGPT has 175,181,292,520 parameters that it had to learn during its training process. Imagine a human (or group of humans) having to manually tweak this number of dials so that ChatGPT makes good predictions for every single text on the Internet. It would never happen.
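To get a feeling for where these parameters live, we can do a quick calculation with the numbers quoted in this article. The sketch assumes that the embedding step stores one vector of 12,288 numbers for each of the 50,257 tokens in the dictionary; the variable names are made up for illustration.

```python
VOCABULARY_SIZE = 50_257            # tokens in the dictionary (from the article)
EMBEDDING_SIZE = 12_288             # numbers per token (from the article)
TOTAL_PARAMETERS = 175_181_292_520  # total quoted in the article

# Assumption: the embedding table stores one vector per token.
embedding_parameters = VOCABULARY_SIZE * EMBEDDING_SIZE
share = embedding_parameters / TOTAL_PARAMETERS

print(f"{embedding_parameters:,} embedding parameters")
print(f"{share:.2%} of all parameters")
```

Under this assumption, the embedding table alone accounts for roughly 618 million dials, yet that is well under 1% of the total. Most of the parameters sit in the attention layers and neural networks.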

On the bad side, this means that we have no idea what the model has learned. After training, ChatGPT is literally a collection of 175,181,292,520 numbers. What do they mean? What information does it know, and which does it not? Why did it learn that (0, 1, -2, 9.5) is a good way to embed "dog", and that if a sentence reads "my dog" this should change to (3, 2, -4, 4)?

We have no idea about this. This is one of the mysteries of LLMs and Deep Learning:

We do not know the meaning of the parameters of the model. But whatever they mean—whatever patterns they have absorbed—they happen to work remarkably well.

References

Andrej Karpathy’s talk Intro to LLMs has several examples of the weird way in which LLMs store information

If you are comfortable with math, 3Blue1Brown has an amazing series of videos on Deep Learning.


[1] The GPT in ChatGPT stands for Generative Pre-trained Transformer. Generative means that it generates text; pre-trained means that it goes through a training phase where it learns how to do things, and then simply applies this knowledge to generate text given an input; and Transformer means that it uses the architecture that we have just described.

"On the bad side, this means that we have no idea what the model has learned"

A sentence that leaves me pensive, to say the least. It seems reality is once again surpassing fiction.

Very interesting topic (again) and also, a very current one! It's kind of scary to think that this whole process is happening with humans knowing so little about it and not having too much control over it either 😥😥